Abstract

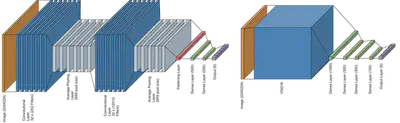

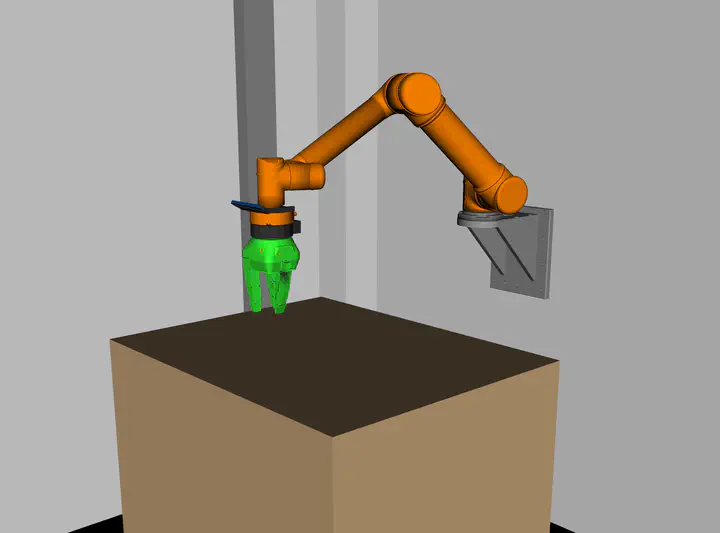

We present a neural end-to-end learning approach for a reach-for-grasp task on an industrial UR5 arm. Our approach combines training samples generated by classical inverse kinematics (IK) solvers in a simulation environment with real images taken from the grasping setup. This allows samples to be generated safely and reliably, independent of the real robotic hardware. The neural architecture is based on a pre-trained VGG16 network and is trained with the collected images as input and the corresponding motor joint values as output. We evaluate the approach on two test sets of different complexity. Based on our results, we outline challenges and solutions for combining classical and neural visuomotor approaches.
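The abstract does not name the specific IK solver. As one possible illustration of how IK can produce joint-value labels without touching real hardware, the following Python sketch generates (target position, joint values) pairs for a UR5 kinematic model. It uses a damped least-squares solver, which is a swapped-in technique and not necessarily the one used in this work. The DH parameters are the nominal published UR5 values. The home configuration `Q_HOME`, the sampling radius, the solver settings, and all function names are hypothetical. The pairing of each sample with a camera image, which the approach relies on, is omitted.

```python
import numpy as np

# Nominal UR5 Denavit-Hartenberg parameters (metres, radians). A real setup
# would use calibrated values; these are assumed here for illustration.
D = np.array([0.089159, 0.0, 0.0, 0.10915, 0.09465, 0.0823])
A = np.array([0.0, -0.425, -0.39225, 0.0, 0.0, 0.0])
ALPHA = np.array([np.pi / 2, 0.0, 0.0, np.pi / 2, -np.pi / 2, 0.0])

# Hypothetical start configuration used to seed the IK solver.
Q_HOME = np.array([0.0, -np.pi / 2, np.pi / 2, -np.pi / 2, -np.pi / 2, 0.0])


def dh_transform(theta, d, a, alpha):
    """Homogeneous transform of one link in the standard DH convention."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(q):
    """End-effector position (x, y, z) in the base frame for joint angles q."""
    T = np.eye(4)
    for i in range(6):
        T = T @ dh_transform(q[i], D[i], A[i], ALPHA[i])
    return T[:3, 3]


def position_jacobian(q, eps=1e-6):
    """3x6 positional Jacobian estimated by forward finite differences."""
    p0 = forward_kinematics(q)
    J = np.zeros((3, 6))
    for i in range(6):
        q_step = q.copy()
        q_step[i] += eps
        J[:, i] = (forward_kinematics(q_step) - p0) / eps
    return J


def solve_ik(target, q0, damping=0.01, max_step=0.2, tol=1e-4, max_iter=300):
    """Damped least-squares IK for a position target; returns (q, converged)."""
    q = np.array(q0, dtype=float)
    for _ in range(max_iter):
        err = target - forward_kinematics(q)
        if np.linalg.norm(err) < tol:
            return q, True
        J = position_jacobian(q)
        # dq = J^T (J J^T + lambda^2 I)^-1 err
        dq = J.T @ np.linalg.solve(J @ J.T + damping**2 * np.eye(3), err)
        norm = np.linalg.norm(dq)
        if norm > max_step:  # cap the joint update per iteration
            dq *= max_step / norm
        q += dq
    return q, False


def generate_samples(n_attempts, radius=0.1, seed=0):
    """Sample reach targets around the home pose; keep only converged IK solutions."""
    rng = np.random.default_rng(seed)
    centre = forward_kinematics(Q_HOME)
    samples = []
    for _ in range(n_attempts):
        target = centre + rng.uniform(-radius, radius, size=3)
        q, ok = solve_ik(target, Q_HOME)
        if ok:  # discard targets the solver could not reach
            samples.append((target, q))
    return samples
```

Rejecting targets for which the solver does not converge is one simple way to ensure that every stored joint vector is a valid label. In a full pipeline, each kept joint vector would then be paired with an image of the corresponding scene.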